
Unmanned aerial systems for agriculture and natural resources

Publication Information

California Agriculture 71(1):5-14. https://doi.org/10.3733/ca.2017a0002

Published online February 09, 2017


Summary

From farm to ranch to forest, the age of drones is here.

Full text

Small unmanned aerial systems (UAS), also known as drones or unmanned aerial vehicles, have a rapidly growing role in research and practice in agriculture and natural resources. Here, we present the parameters and key limitations of the technology, summarize current regulations and cover examples of University of California research enabled by UAS technology.

The Inspire 1 drone, made by DJI, flies with an RGB camera over the UC Berkeley Blue Oak Ranch Reserve in Santa Clara County.

Motorized UAS were introduced as a potential remote sensing tool for scientific research in the late 1970s. However, due to a variety of limitations (the weight and limited functionality of available sensors and cameras, the lack of GPS-guided autopilots and so on), these platforms had few practical applications (Przybilla and Wester-Ebbinghaus 1979; Wester-Ebbinghaus 1980; cited by Colomina and Molina 2014). For years, UAS development was driven by military needs and applications. The relatively few applications in research and agriculture included deployments in Japan for crop dusting and in Australia for meteorological studies (Colomina and Molina 2014).

In the past decade, several factors have greatly increased the utility and ease of use of UAS, while prices have fallen. Consumer demand drove the hobby aircraft industry to make major improvements in its vehicles. Integrating improved battery technology, miniature inertial measurement units (IMU, initially developed for smartphones), GPS and customizable apps for smartphones and tablets has delivered longer flight times, greater reliability, easier operation and the ability to better utilize cameras and other sensors needed for applications in agriculture and natural resources (see below, Types of UAS). Innovations in sensor technology now include dozens of models of lightweight visible-spectrum and multispectral cameras capable of capturing reliable, scientifically valid data from UAS platforms (see UAS Sensors) (Whitehead and Hugenholtz 2014). Meanwhile, the Federal Aviation Administration (FAA) has helped facilitate increased UAS use, with rule changes adopted in August 2016 that lowered what had previously been significant regulatory obstacles to the legal use of UAS for research and commercial purposes (see Regulations sidebar).

UC faculty throughout California are using UAS in a wide range of agricultural and environmental research projects — from grazed rangelands to field crops and orchards, forests, lakes and even the ice sheets of Greenland (see below, Research case studies). UAS also have become a part of the curriculum across the UC system, and are increasingly used by campus staff in departments from facilities to athletics to marketing (see UAS at UC sidebar).

Andreas Anderson, an instructor with the Center for Information Technology Research in the Interest of Society at UC Merced, checks the control systems for a drone-mounted multispectral camera before a research flight in Merced County for a study on water stress in almond trees.

UAS are already in wide use in agriculture, and the sector is projected to continue to account for a large share — 19% in the near term, per a recent FAA report (FAA 2016a) — of the commercial UAS market in the United States. The use of UAS for research, particularly remote sensing and mapping, is soaring: A search in Scopus (2016) finds 3,079 articles focused on UAS or UAV applications in 2015, compared with 769 in 2005. Across all commercial uses, the FAA estimates 2016 sales of commercial UAS (including those used for research purposes) at 600,000 units and expects that figure to balloon to 2.5 million units annually as soon as 2017 (FAA 2016a).
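As a quick check on the Scopus counts (our own arithmetic, assuming steady compound growth between 2005 and 2015, which the two counts alone cannot confirm), the increase works out to roughly fourfold over the decade, or about 15% more articles each year:

```latex
\frac{3079}{769} \approx 4.0,
\qquad
\left(\frac{3079}{769}\right)^{1/10} - 1 \approx 0.149
```

The FAA sales projection implies a similar multiple within a single year: 2.5 million / 600,000 ≈ 4.2.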

Regulations

The U.S. Federal Aviation Administration (FAA) provides guidance and regulation for U.S. airspace. The agency has adopted different rules for recreational and nonrecreational uses of UAS.

The recreational use of UAS is regulated by Title 14, Part 101 of the Code of Federal Regulations, commonly known as the “model aircraft” regulations. These regulations apply only if the operator is not compensated in any way for the UAS operation and the flight is not incidental to a business purpose, regardless of compensation (so, for instance, farming-related uses of UAS do not count as recreational, even if the UAS is operated by the grower or a farm employee). Recreational UAS operators are not required to have a license and must comply only with basic safety rules such as:

- Fly at or below 400 feet
- Keep your UAS within sight
- Never fly near other aircraft, especially near airports
- Never fly over groups of people
- Never fly over stadiums or sports events
- Never fly near emergency response efforts such as fires
- Never fly under the influence
- Be aware of airspace requirements (FAA 2016b)

Nonrecreational use, defined as deployment of a UAS for any type of “work, business purposes, or for compensation or hire” (FAA 2016c), falls under a different set of rules, those for “small unmanned aircraft” weighing less than 55 pounds: Title 14, Part 107 of the Code of Federal Regulations. These rules were updated in several important ways in August 2016.

Previously, the only legal way to fly a UAS for nonrecreational use was with an FAA Certificate of Authorization (COA) for each aircraft, issued under Section 333 of the FAA Modernization and Reform Act of 2012 (FAA 2016d). Obtaining a COA was difficult (often requiring the operator to hold a pilot's license for manned aircraft) and time-consuming, requiring extensive documentation followed by FAA processing times of several months. Once a COA was granted, the pilot was typically required to file a notice to airmen (NOTAM) with the FAA before every flight, and many businesses and institutions were hesitant to authorize flights due to liability concerns, given the absence of standardized safety guidelines.

The new rules eliminate the need for a manned pilot's license, replacing it with a requirement that nonrecreational operators hold a newly created type of license specifically for UAS operation. This license is obtained after passing an “Unmanned Aircraft – General” aeronautical knowledge test at an FAA-approved knowledge testing center (the test fee is $150). The FAA estimates that the average applicant will spend 20 hours in self-study to prepare for the two-hour exam, and anticipates that 90% of applicants will pass on the first try. By comparison, obtaining a manned pilot's license costs thousands of dollars for instruction, in-flight training and exam fees. In addition, the new rules create a simple online registration process for commercial and research UAS ($5 per UAS) (FAA 2016e).

The FAA does not generally require logging of flight data on a per-flight basis, unless the operator is flying under a specific waiver (e.g., for nighttime flight), in which case the logging terms are specified in that waiver. Per-flight logging and reporting is required for UC-affiliated researchers, who must submit flight information to the UC Center of Excellence for Unmanned Aircraft Systems Safety so that UC can track its UAS operations (ehs.ucop.edu/drones).

The full list of rules governing UAS flight for research and commercial purposes is provided on the FAA website.

Despite the growing ubiquity of UAS, a variety of practical and scientific challenges remain to using the technology effectively.

Collecting and processing data that is useful for management decisions requires a disparate range of skills and knowledge — understanding the relevant regulations, determining what sensing technology and UAS to use for the problem at hand, developing a data collection plan, safely piloting the UAS, managing the large data sets generated by the sensors, selecting and then using the appropriate image-processing and mapping software, and interpreting the data.

In addition, as highlighted in the research cases presented below, much science remains to be done to develop reliable methods for interpreting and processing the data gathered by UAS sensors, so that a user can know with confidence that the changes or patterns detected by a UAS camera reflect reality.

The UC Agriculture and Natural Resources (ANR) Informatics and GIS (IGIS) program has recently incorporated drone services into the portfolio of support that it offers to UC ANR and its affiliated UC Agricultural Experiment Station faculty. You can find out more about these services, and UC affiliates can submit service requests, via the IGIS website, igis.ucanr.edu. Working closely with the UC Office of the President's Center of Excellence on Unmanned Aircraft System Safety (UCOP 2016), IGIS has also developed a workshop curriculum around UAS technology, regulations and data processing, which is open to members of the UC system as well as the public. Please check the IGIS website to learn about upcoming training events around the state in 2017, including a three-day “DroneCamp” that will intensively cover drone technology, regulations and data processing.

Author Sean Hogan discusses drone technology with a group of managers from the University of California Natural Reserve System during a field day in October at the UC Berkeley Blue Oak Ranch Reserve, Santa Clara County.

UAS at UC

UAS are becoming part of the standard curriculum across the UC system, helping to prepare students to plug into a sector that is expected to generate thousands of new jobs in the coming years (more than 12,000 by 2017 in California alone, per a 2013 study (AUVSI 2013)). Here are several examples:

- UC Berkeley: Electrical Engineering and Computer Science 98/198, Unmanned Aerial Vehicles Flight Control and Assembly
- UC Davis: Aerospace Science and Engineering 10, Drones and Quadcopters; Geography 298, Environmental Monitoring and Research with Small UAS
- UC Irvine: Engineering 7, Introduction to Engineering
- UCLA: Architecture and Urban Design students fly drones to collect images that are used to create 3-D visualizations of projects
- UC Merced: Mechanical Engineering 190, Unmanned Aerial Systems
- UC San Diego: Course credit for competing in the American Institute of Aeronautics and Astronautics (AIAA) Design, Build, Fly competition
- UC Agriculture and Natural Resources: In collaboration with the UC Berkeley Geospatial Innovation Facility, the IGIS program provides workshops on UAS technology and regulations across California that are UC-oriented but also open to the public (igis.ucanr.edu/IGISTraining)

Campus staff, too, are using UAS in a wide range of applications — from monitoring construction projects and inspecting buildings to shooting video for marketing and sports programs.

Changes in August 2016 to the federal regulations governing UAS operation (see Regulations sidebar) make it far easier to legally operate a UAS for nonrecreational purposes. Based on anecdotal information tracked by the UC Center of Excellence on Unmanned Aircraft System Safety, UAS use has grown dramatically across multiple UC campuses in the few months since the adoption of the new rules.

Unmanned aerial systems and the sensors they carry

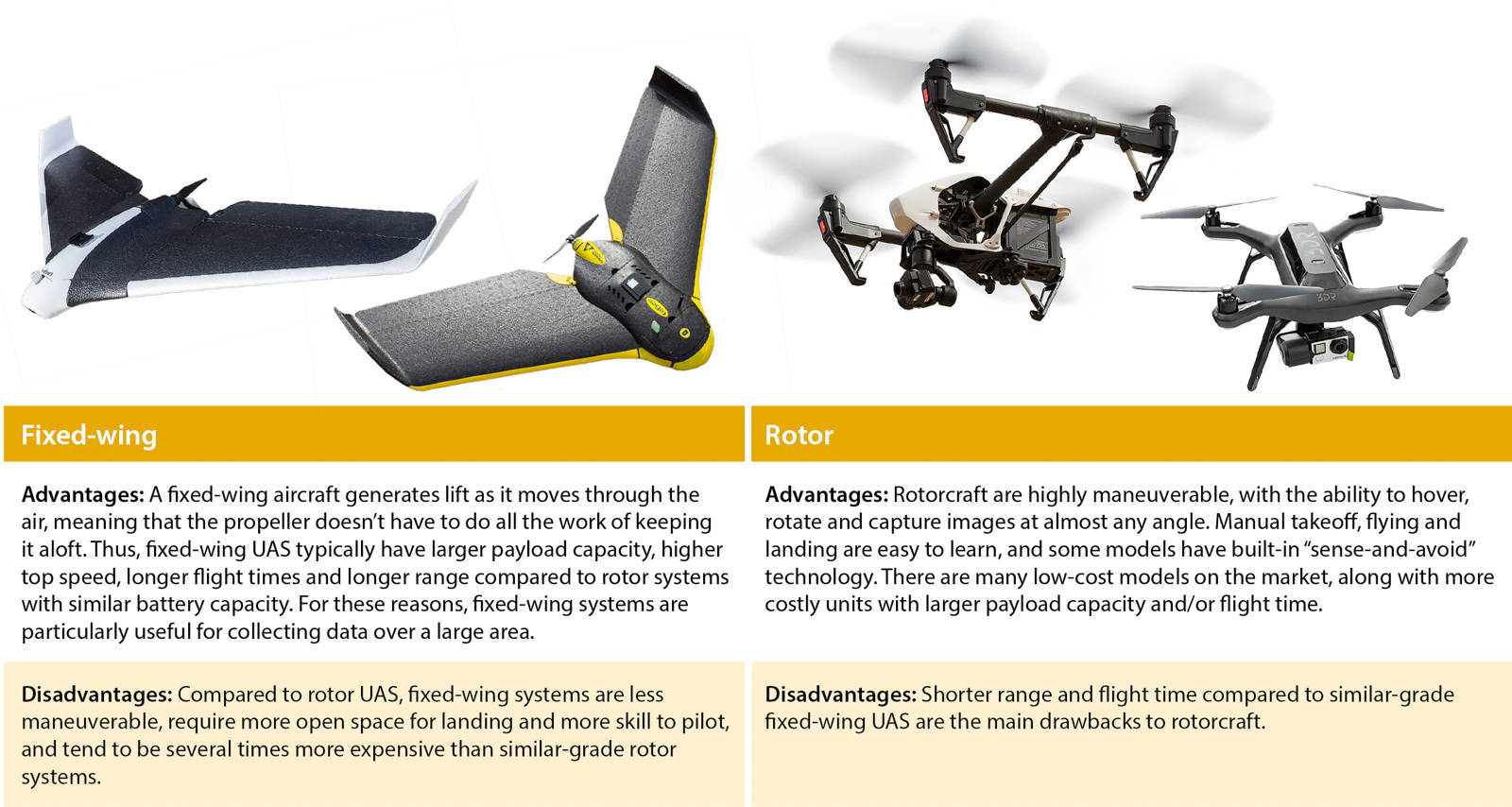

Types of UAS

Autopilots

Controller for a DJI Inspire 1 drone. The attached tablet shows an inflight view and other information.

Both fixed-wing and rotary-wing UAS can be flown manually, but nonrecreational users rely primarily on what are known as “integrated flight systems” that enable safe precision flying, improved stability control and the ability to precisely replicate data-collection flights. These systems typically include GPS-enabled autopilots, inertial measurement units (IMU) to monitor the aircraft's orientation, battery-monitor systems to ensure that the UAS reserves enough charge to fly “home,” and systems that attempt to land the aircraft in the event of an emergency.
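The battery-reserve idea can be illustrated with a simple energy budget: compare the energy left in the pack with the energy needed to fly straight home and land, plus a safety margin. This is a minimal sketch, not how any particular autopilot implements it; the constant cruise power, no-wind assumption, landing budget and 1.3 safety factor are all hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class BatteryState:
    remaining_wh: float    # usable energy left in the pack, watt-hours
    cruise_power_w: float  # assumed average power draw while flying home, watts
    landing_wh: float      # assumed energy budget for descent and landing, watt-hours


def distance_to_home_m(east_m: float, north_m: float) -> float:
    """Straight-line horizontal distance from the home point at (0, 0), in meters."""
    return math.hypot(east_m, north_m)


def energy_to_return_wh(dist_m: float, speed_m_s: float, state: BatteryState) -> float:
    """Energy to fly straight home at constant speed (no wind), then land."""
    flight_time_h = dist_m / speed_m_s / 3600.0
    return flight_time_h * state.cruise_power_w + state.landing_wh


def should_return_home(east_m: float, north_m: float, speed_m_s: float,
                       state: BatteryState, safety_factor: float = 1.3) -> bool:
    """True once remaining energy no longer covers the return trip plus a margin."""
    needed_wh = energy_to_return_wh(distance_to_home_m(east_m, north_m), speed_m_s, state)
    return state.remaining_wh <= safety_factor * needed_wh


# Hypothetical example: 1,500 m from home, flying back at 10 m/s.
pack = BatteryState(remaining_wh=20.0, cruise_power_w=200.0, landing_wh=2.0)
print(should_return_home(900.0, 1200.0, 10.0, pack))  # 20 Wh left vs. about 13.4 Wh needed
```

Real systems also account for wind, altitude changes and battery voltage sag, which is one reason a generous safety factor is used.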

Controller for a 3D Robotics Solo drone. The attached Android tablet displays a programmed flight plan.

Flights are generally planned and executed through a tablet or phone application. GPS waypoints along flight paths can function as trigger points that activate or deactivate an on-board sensor. The autopilot systems are flexible and can be altered in midflight, for instance, by activating preset flight commands such as loiter (stay in one place), circle, land or return to home.
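A common data-collection plan is a back-and-forth "lawnmower" pattern whose line spacing follows from the camera footprint and the desired side overlap between adjacent image strips. The sketch below generates such a plan for a rectangle in local meters and tags each line's start and end waypoints with camera on/off actions. The action strings, local coordinate frame and default 70% side overlap are hypothetical; a real planning app would encode these in its own mission format.

```python
import math
from typing import List, Tuple

# (east_m, north_m, action) in a local frame with one survey corner at (0, 0).
Waypoint = Tuple[float, float, str]


def swath_width_m(altitude_m: float, hfov_deg: float) -> float:
    """Ground width covered by one nadir image, from altitude and horizontal field of view."""
    return 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)


def lawnmower_plan(width_m: float, height_m: float, altitude_m: float,
                   hfov_deg: float, side_overlap: float = 0.7) -> List[Waypoint]:
    """Back-and-forth (boustrophedon) survey of a width x height rectangle.

    Each flight line starts with a 'camera_on' waypoint and ends with a
    'camera_off' waypoint, so the sensor records only over the survey area.
    """
    spacing = swath_width_m(altitude_m, hfov_deg) * (1.0 - side_overlap)
    # Small tolerance keeps floating-point rounding from adding a duplicate line.
    n_lines = max(1, math.ceil(width_m / spacing - 1e-9) + 1)
    plan: List[Waypoint] = []
    for i in range(n_lines):
        east = min(i * spacing, width_m)  # clamp the last line to the far edge
        start, end = (0.0, height_m) if i % 2 == 0 else (height_m, 0.0)
        plan.append((east, start, "camera_on"))
        plan.append((east, end, "camera_off"))
    return plan


for wp in lawnmower_plan(200.0, 300.0, altitude_m=100.0, hfov_deg=90.0, side_overlap=0.75):
    print(wp)
```

Because the plan is deterministic, re-running it with the same parameters reproduces the same flight lines, which is what makes repeat surveys over a season comparable.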

Integrated flight systems also can be programmed to assist during manually controlled flights by limiting flight speeds and flight distances. Recent advances in these systems include obstacle avoidance systems and built-in maps of restricted airspace. Continuing improvements in the technical integration of flight controllers, UAS firmware (the control-system code in the UAS itself) and sensor software should make UAS more reliable and further reduce safety incidents and data-collection problems arising from user error.

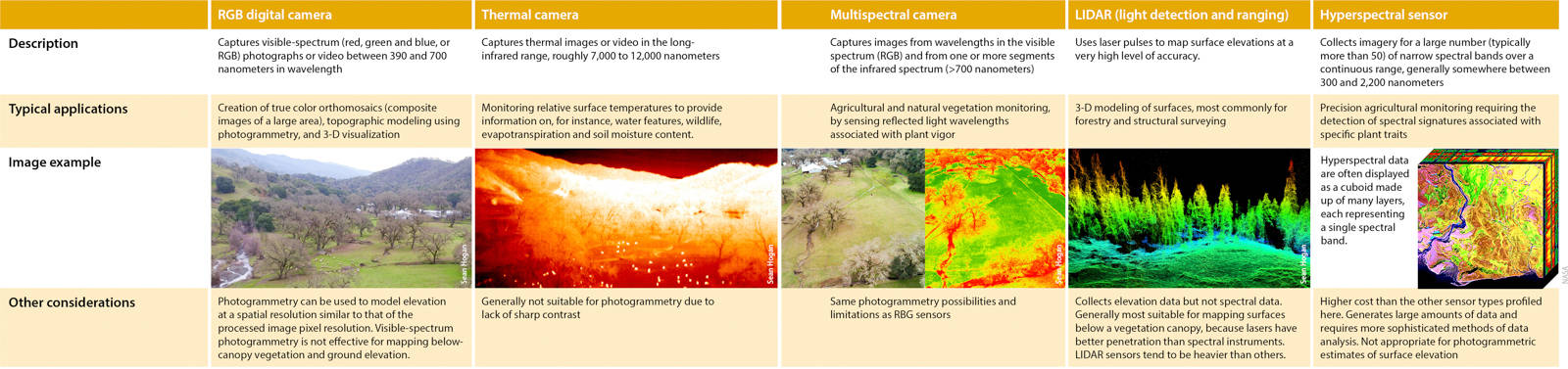

UAS sensors

The type of sensor that a UAS can carry is determined by the UAS's designed payload capacity. Any type of instrument may be used as long as it's light enough for a given UAS platform. Most conventional UAS have a maximum payload between 300 and 1,500 grams (0.66 to 3.3 pounds). There is a tradeoff between instrument payload and flight time, especially for rotorcraft.
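Why payload costs rotorcraft so much flight time can be seen from ideal momentum theory, where hover power grows with thrust to the 1.5 power: P = sqrt(T^3 / (2ρA)), with T the weight, ρ the air density and A the total rotor disk area. The sketch below applies that formula with an assumed overall efficiency factor; the airframe mass, rotor size, battery capacity and efficiency are hypothetical numbers, not specifications of any drone in this article, and real endurance also depends on wind, forward flight and battery behavior.

```python
import math

AIR_DENSITY_KG_M3 = 1.225  # sea-level standard atmosphere
GRAVITY_M_S2 = 9.81


def hover_power_w(total_mass_kg: float, rotor_radius_m: float, n_rotors: int,
                  efficiency: float = 0.5) -> float:
    """Ideal momentum-theory hover power, sqrt(T^3 / (2 rho A)), divided by an
    assumed overall efficiency that lumps rotor, motor and electronics losses."""
    thrust_n = total_mass_kg * GRAVITY_M_S2
    disk_area_m2 = n_rotors * math.pi * rotor_radius_m ** 2
    ideal_w = math.sqrt(thrust_n ** 3 / (2.0 * AIR_DENSITY_KG_M3 * disk_area_m2))
    return ideal_w / efficiency


def hover_endurance_min(battery_wh: float, usable_fraction: float, airframe_kg: float,
                        payload_kg: float, rotor_radius_m: float, n_rotors: int) -> float:
    """Minutes of hover from the usable share of battery energy."""
    power_w = hover_power_w(airframe_kg + payload_kg, rotor_radius_m, n_rotors)
    return battery_wh * usable_fraction / power_w * 60.0


# Hypothetical quadcopter: 1.5 kg with battery, 12 cm rotors, 80 Wh pack, 80% usable.
for payload_kg in (0.0, 0.3, 0.6):
    print(payload_kg, round(hover_endurance_min(80.0, 0.8, 1.5, payload_kg, 0.12, 4), 1))
```

Under this idealization, adding a third of the airframe's mass as payload raises hover power by about 54% ((4/3)^1.5 ≈ 1.54), cutting hover time by roughly a third.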

Most sensors can transmit a live video stream to a base station receiver for the pilot or pilot's observer to monitor. This functionality drives much of the public's interest in UAS and has been useful for search and rescue and police applications. For most scientific purposes, however, the data are recorded for later visualization and analysis.

Research case studies

Crop agriculture

Seeing signs of stress in orchards

When a tree is stressed — whether due to pest infestation, nutrient deficiency or insufficient water — its leaves change. These changes may be detectable in the visible light spectrum — a shift in a leaf's shade of green. They can also be “seen” in other bands of the electromagnetic spectrum — for example, a change in the texture of a leaf's waxy coating may alter how infrared light is reflected.

Different types of stress generate unique electromagnetic “signatures.” If these signatures can be reliably correlated with specific causes, a UAS could be deployed to quickly scan a large orchard for signs of trouble, enabling early detection and treatment of pest infestations and other problems.

Robert Starnes, a senior superintendent of agriculture in the UC Davis Department of Entomology, flies a drone over a field of strawberries in San Luis Obispo County to study how reflectance data may help detect outbreaks of spider mite, a common pest.

Christian Nansen, a professor of entomology and nematology at UC Davis, leads a team working to refine this monitoring technique. They use a hyperspectral camera, which records a very high-resolution signature across a wide range of wavelengths. One of the challenges is that the electromagnetic signatures often contain high degrees of data “noise” (due to shadows, dust on leaves, differences between leaves and other factors), making it difficult to discern a clear signal associated with the stress that the tree is experiencing. To address this problem, Nansen's team is refining a combination of advanced calibration, correction and data filtering techniques. As entomologists, they are also working to understand in fine detail the interactions between different pest species and tree stress, and how those affect the electromagnetic signature of a tree's leaves (Christian Nansen, UC Davis, chrnansen.wix.com/nansen2).
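Two generic preprocessing steps give a flavor of what "filtering" and "correction" can mean for a reflectance spectrum: smoothing across neighboring bands to damp random noise, and dividing by the spectrum's mean so that leaves differing only in overall brightness (for example, partly shaded) become comparable. This is an illustrative sketch under those assumptions, not the Nansen team's pipeline; the window size and sample values are hypothetical.

```python
from typing import List, Sequence


def moving_average(spectrum: Sequence[float], window: int = 5) -> List[float]:
    """Centered moving average across bands; the window shrinks at the spectrum's ends."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    values = list(spectrum)
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        segment = values[lo:hi]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


def brightness_normalize(spectrum: Sequence[float]) -> List[float]:
    """Divide by mean reflectance, removing a uniform brightness scaling such as shade."""
    mean = sum(spectrum) / len(spectrum)
    return [v / mean for v in spectrum]


sunlit = [0.10, 0.20, 0.30, 0.40, 0.50]
shaded = [0.5 * v for v in sunlit]  # same leaf, half the illumination
print(brightness_normalize(moving_average(sunlit, 3)))
print(brightness_normalize(moving_average(shaded, 3)))
```

Normalization only removes a uniform scaling; shadows and dust that change the spectral shape need the more specialized calibration the team is developing.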

Detecting deficiencies in almonds and onions

Rapid detection of water stress can help farmers optimize irrigation water applications and improve crop yields. In an orchard, precise assessments of water stress typically require manual measurements at individual trees using a device known as a pressure bomb that measures water tension in individual leaves. Tiebiao Zhao, a graduate student at UC Merced's Mechatronics, Embedded Systems and Automation (MESA) Laboratory, is collaborating with UC ANR Merced County pomology farm advisor David Doll with the goal of developing UAS-based tools to assess water stress across a large almond orchard at a high level of accuracy. Water stress can be detected by relatively low-cost multispectral cameras due to changes in how the canopy reflects near-infrared light. This project is building a database of canopy spectral signatures and water-stress measurements with the objective of developing indices that can be used to reliably translate UAS imagery into useful water-stress information.
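Turning imagery into water-stress information amounts to calibrating a spectral index against ground truth, here the pressure-bomb readings. A minimal sketch, assuming a straight-line relationship between a per-tree index value and stem water potential (whether a linear fit holds, and which index to use, is what such a project would need to establish); all values below are invented for illustration.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least squares y = intercept + slope * x; returns (slope, intercept, r^2)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


def predict(index_value: float, slope: float, intercept: float) -> float:
    """Estimated water potential for a tree with no pressure-bomb reading."""
    return intercept + slope * index_value


# Hypothetical per-tree index values and pressure-bomb readings (bars; more negative = more stress).
index_values = [0.62, 0.58, 0.55, 0.50, 0.47]
water_potential_bars = [-8.0, -10.5, -12.0, -14.5, -16.0]
slope, intercept, r2 = fit_line(index_values, water_potential_bars)
print(round(slope, 1), round(intercept, 1), round(r2, 3))
print(round(predict(0.53, slope, intercept), 1))
```

The r² value is one simple measure of how reliably the index tracks measured stress; a real calibration would also be validated on trees held out of the fit.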

Visible-spectrum, left, and near-infrared (NIR), right, images of an almond orchard in Merced County. UC researchers are developing methods to use NIR imagery to quickly and accurately detect areas of water stress.

In a related experiment, Zhao is working with Dong Wang of the USDA Agricultural Research Service (ARS) San Joaquin Valley Agricultural Sciences Center to detect the effects of varying irrigation levels and biomass soil amendments on crop development and yield in onions. As in Zhao's almond experiment, the researchers are comparing spectral signatures gathered by low-cost UAS-mounted multispectral cameras with ground-truth data to better understand the relationship between the two (Tiebiao Zhao, UC Merced, mechatronics.ucmerced.edu).

Andreas Anderson, an instructor with the Center for Information Technology Research in the Interest of Society at UC Merced, carefully lands a drone following a research flight in an almond orchard in Merced County.

Natural resources

Mapping the Greenland ice sheet

The Greenland ice sheet covers 656,000 square miles and holds roughly 2.3 trillion acre-feet of water — the sea level equivalent of 24 feet. As the climate warms, ice sheet melt accelerates; therefore, understanding the processes involved is important. This knowledge can help to refine predictions about the ice sheet's future and its contribution to global sea level rise.

Above left, a 3D Robotics Solo drone carrying a Canon point-and-shoot camera flies a mapping mission over a lake in western Greenland the day after a jökulhlaup (a glacial outburst flood). Above right, precise ground control point surveys are needed to accurately geolocate imagery collected with a drone and produce high-quality orthomosaics. The photo shows Rutgers University doctoral student Sasha Leidman conducting a differential GPS survey of a ground control marker on the Greenland ice sheet.
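To see how ground control points (GCPs) tie imagery to map coordinates, consider the simplest case: fitting an affine transform from pixel positions to surveyed easting/northing by least squares, then checking the residual error at the GCPs. Photogrammetry packages use much fuller camera models (bundle adjustment), so this is only a simplified illustration; the GCP coordinates below are hypothetical.

```python
import numpy as np


def fit_affine(pixel_xy, map_xy):
    """Least-squares affine transform from image (col, row) to map (E, N); needs >= 3 GCPs."""
    px = np.asarray(pixel_xy, dtype=float)
    mp = np.asarray(map_xy, dtype=float)
    design = np.column_stack([px, np.ones(len(px))])  # each row: [col, row, 1]
    coeffs, *_ = np.linalg.lstsq(design, mp, rcond=None)  # shape (3, 2)
    return coeffs


def apply_affine(coeffs, pixel_xy):
    """Map pixel coordinates to (E, N) with fitted coefficients."""
    px = np.asarray(pixel_xy, dtype=float)
    return np.column_stack([px, np.ones(len(px))]) @ coeffs


def rmse(coeffs, pixel_xy, map_xy):
    """Root-mean-square horizontal error at the control points, in map units."""
    resid = apply_affine(coeffs, pixel_xy) - np.asarray(map_xy, dtype=float)
    return float(np.sqrt(np.mean(np.sum(resid ** 2, axis=1))))


# Hypothetical GCPs: pixel positions and their surveyed coordinates (meters, local grid).
pixels = [(0, 0), (1000, 0), (0, 1000), (1000, 1000), (500, 500)]
surveyed = [(100 + 0.05 * c, 200 - 0.05 * r) for c, r in pixels]
coeffs = fit_affine(pixels, surveyed)
print(apply_affine(coeffs, [(250, 750)]), rmse(coeffs, pixels, surveyed))
```

With real survey data the RMSE would not be zero; it gives a direct estimate of how accurately the imagery is geolocated.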

A team of researchers led by UCLA professor of geography Laurence Smith is using UAS-based imaging technologies to map and monitor meltwater generation, transport and export. The group's UAS carry multiband visible and near-infrared digital cameras that capture sub-meter resolution data, from which the researchers create multiple orthomosaics of the ice surface and perimeter over time. They are using the data to analyze a number of different cryohydrologic processes and features, including mapping rivers on the ice surface from their origins to their termination at moulins (vertical conduits that connect the ice surface with en- and subglacial drainage networks) and meltwater outflow to the ocean. The team is also generating digital elevation models of the ice surface to extract hydrologic features, microtopography and drainage divides. In addition, they are working toward mapping ice surface impurities and albedo (the fraction of the sun's energy reflected by the ice surface) at high resolution using multiband visible and near-infrared images. Accurate, high-resolution albedo data are important for modeling surface meltwater runoff on the ice sheet. Contributors to the project include UCLA doctoral students Matthew Cooper and Lincoln Pitcher, UCLA postdoctoral researcher Kang Yang, Rutgers University doctoral student Sasha Leidman and Aberystwyth University (UK) doctoral student Johnny Ryan (see also Ryan et al. 2015, Ryan et al. 2016, Ryan in preparation).
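One standard way to extract flow paths and drainage divides from a digital elevation model is the D8 method: water in each cell is routed to whichever of its eight neighbors gives the steepest downhill slope. The article does not say which method the team uses, so this is a generic sketch; the tiny DEM is invented.

```python
import math
from typing import List, Optional, Sequence, Tuple

Cell = Tuple[int, int]  # (row, col)

# Offsets to the eight neighbors of a grid cell.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def d8_step(dem: Sequence[Sequence[float]], cell: Cell, cell_size_m: float = 1.0) -> Optional[Cell]:
    """Offset to the neighbor with the steepest downhill slope, or None at a pit or flat."""
    r, c = cell
    best, best_slope = None, 0.0
    for dr, dc in NEIGHBORS:
        rr, cc = r + dr, c + dc
        if 0 <= rr < len(dem) and 0 <= cc < len(dem[0]):
            # Diagonal neighbors are sqrt(2) cell widths away.
            slope = (dem[r][c] - dem[rr][cc]) / (cell_size_m * math.hypot(dr, dc))
            if slope > best_slope:
                best, best_slope = (dr, dc), slope
    return best


def trace_flow_path(dem: Sequence[Sequence[float]], start: Cell, cell_size_m: float = 1.0) -> List[Cell]:
    """Follow D8 directions downhill until a local minimum. On an ice-surface DEM such a
    minimum might be a moulin or a lake, or simply a DEM artifact."""
    path = [start]
    while True:  # each step strictly lowers elevation, so this always terminates
        step = d8_step(dem, path[-1], cell_size_m)
        if step is None:
            break
        path.append((path[-1][0] + step[0], path[-1][1] + step[1]))
    return path


dem = [[5.0, 4.0, 3.0],
       [4.0, 3.0, 2.0],
       [3.0, 2.0, 1.0]]
print(trace_flow_path(dem, (0, 0)))
```

Counting how many cells drain through each cell (flow accumulation) then highlights channels, and the boundaries between areas draining to different outlets are the drainage divides.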

To monitor meltwater fluxes across the surface of the Greenland ice sheet, researchers generated orthomosaics like this one from digital imagery shot by a Canon point-and-shoot camera mounted on a 3D Robotics Solo drone.

3-D thermal mapping of water bodies

A drone-mounted thermal sensor can monitor temperature in a water body or watercourse at various depths and times of day, helping to identify habitat zones for aquatic species.

Researchers at UC Berkeley, including professor Sally Thompson's group in the Department of Civil and Environmental Engineering, are using UAS as a novel thermal sensing platform. Working with robotics experts at the University of Nebraska–Lincoln, the team tested an unmanned system capable of lowering a temperature sensor into a water body to record temperature measurements throughout the water column, which is useful, for instance, in identifying habitat zones for aquatic species. Initial field experiments that compared in situ temperature measurements with those made from the UAS platform indicate that UAS may support improved high-resolution 3-D thermal mapping of water bodies in a manageable timeframe (i.e., 2 hours) sufficient to resolve diurnal variations (Chung et al. 2015). More recent work has confirmed the viability of mapping thermal refugia for cold-water fish species from this platform.
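Once a UAS has lowered a sensor at several stations, each station yields a vertical temperature profile, and a first-pass map of cold-water refugia can report how deep one must go to reach water at or below a temperature threshold. A minimal sketch, assuming linear interpolation between readings; the station names, readings and 18 °C threshold are hypothetical (suitable thresholds depend on the species and life stage).

```python
from typing import Dict, List, Optional, Tuple

# One vertical profile: (depth_m, temperature_c) readings, sorted from the surface downward.
Profile = List[Tuple[float, float]]


def depth_reaching(profile: Profile, threshold_c: float) -> Optional[float]:
    """Shallowest depth where water is at or below threshold_c, linearly interpolated
    between readings; None if the whole sampled column is warmer."""
    for (d0, t0), (d1, t1) in zip(profile, profile[1:]):
        if t0 <= threshold_c:
            return d0
        if t1 <= threshold_c:
            return d0 + (t0 - threshold_c) * (d1 - d0) / (t0 - t1)
    if profile and profile[-1][1] <= threshold_c:
        return profile[-1][0]
    return None


def map_cold_water(profiles: Dict[str, Profile], threshold_c: float) -> Dict[str, Optional[float]]:
    """Depth to cold water at each sampling station (None = no cold water found)."""
    return {station: depth_reaching(p, threshold_c) for station, p in profiles.items()}


stations = {
    "pool_A": [(0.0, 22.5), (0.5, 21.0), (1.0, 18.5), (1.5, 16.0)],
    "riffle_B": [(0.0, 23.0), (0.4, 22.6)],
}
print(map_cold_water(stations, threshold_c=18.0))
```

Repeating the survey at different times of day would show how those depths shift with diurnal heating.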

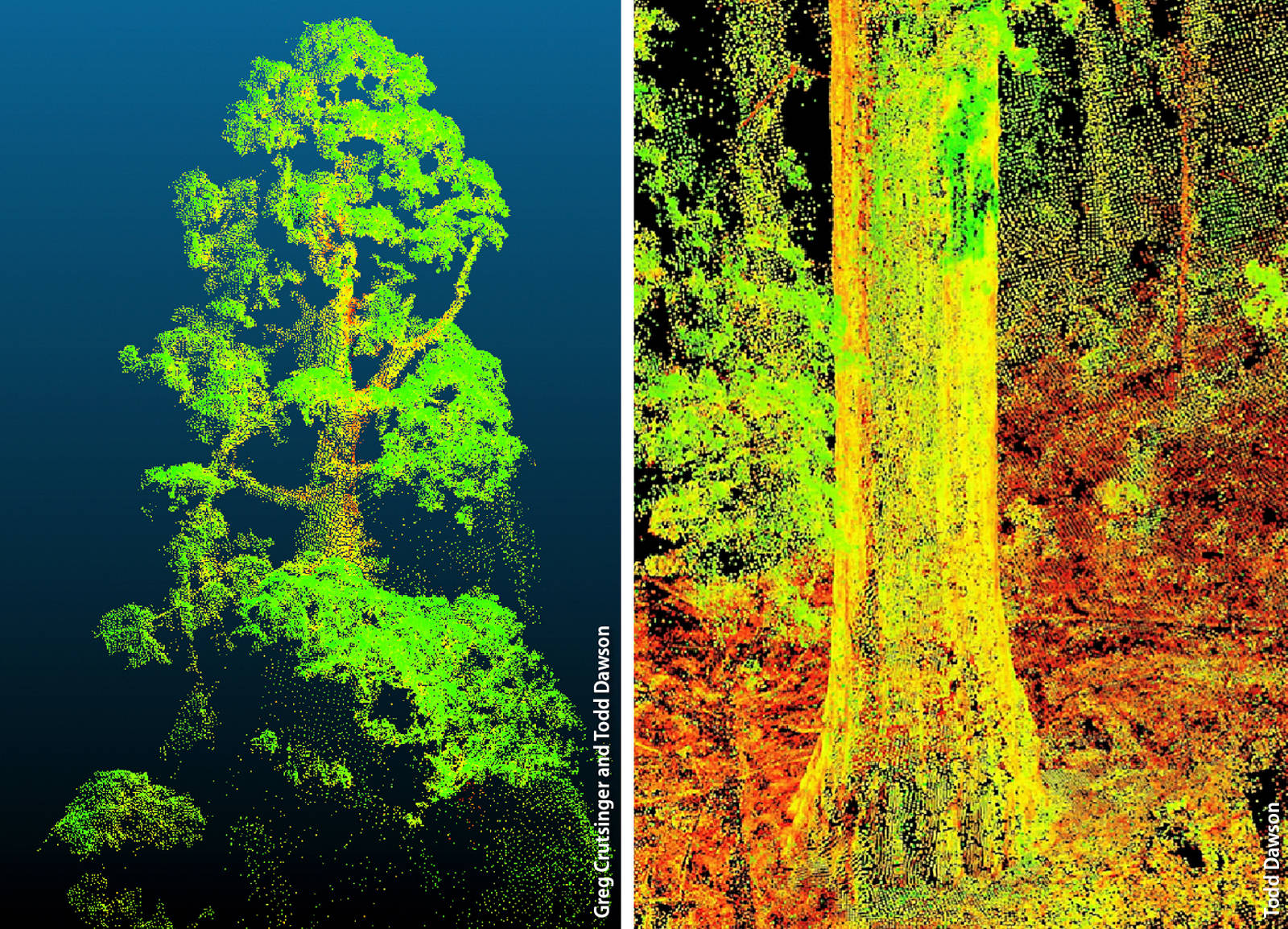

Mapping tree architecture

UC Berkeley professor Todd Dawson (departments of Integrative Biology and Environmental Science, Policy and Management) and his redwood science group are using UAS-mounted multispectral cameras to create 3-D maps of giant sequoias — trunks, branches and foliage — at higher resolution and with far less labor than was previously possible.

The maps were developed through a partnership with Parrot Inc. The company builds the cameras and UAS used in the research and, with its partners, manages Pix4D, the software specially designed to analyze the images.

Precise 3-D maps of trees, like these of giant sequoias generated from multispectral images using Parrot Inc.'s Pix4D software, can yield information on water and carbon dioxide uptake, forest microclimates and forest carbon stocks.

The maps have a range of potential applications, from climate science to forest ecology. Knowing the total leaf area and aboveground biomass of a tree and the structure of its canopy, for instance, allows researchers to calculate daily carbon dioxide and water uptake — important variables in assessing the interactions between trees, soil and atmosphere as the climate changes. A high-resolution map also yields information about a tree's influences on its immediate environment — how much leaf litter falls to the forest floor, for instance, and to what degree shade from the canopy influences the microclimate around the tree, or the habitats in it.

Another application: A precise map of a tree also provides a good estimate of how much carbon is stored in it as woody biomass. This information, in turn, can be combined with information from coarser (and faster) methods of forest mapping, such as LIDAR, to improve estimates of the carbon stored in a large forested area. Mapping every tree in a forest at a high level of detail isn't practical. But such maps of a sample of trees can provide good correlations between carbon mass and a variable like tree height, which LIDAR can measure to a high degree of accuracy — yielding a better estimate of the total amount of carbon in the forest.
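The sampling strategy described above can be sketched as an allometric fit: a power law, carbon = a × height^b, fitted by least squares on log-transformed values from the finely mapped trees, then applied to LIDAR-measured heights across the stand. The power-law form is a common choice but an assumption here, and the numbers are invented. Back-transforming from logs tends to underestimate mean values, so a bias correction factor is often applied in practice; it is omitted for brevity.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(heights_m: Sequence[float], carbon_kg: Sequence[float]) -> Tuple[float, float]:
    """Fit carbon = a * height**b by ordinary least squares on log(height), log(carbon)."""
    xs = [math.log(h) for h in heights_m]
    ys = [math.log(c) for c in carbon_kg]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    b = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    a = math.exp(mean_y - b * mean_x)
    return a, b


def stand_carbon_kg(a: float, b: float, lidar_heights_m: Sequence[float]) -> float:
    """Total carbon for all trees whose heights LIDAR measured."""
    return sum(a * h ** b for h in lidar_heights_m)


# Hypothetical: carbon estimated from detailed 3-D maps of a few sample trees.
sample_heights = [35.0, 52.0, 61.0, 74.0]
sample_carbon = [9000.0, 26000.0, 40000.0, 65000.0]
a, b = fit_power_law(sample_heights, sample_carbon)
lidar_heights = [30.0, 45.0, 48.0, 66.0, 80.0]  # hypothetical LIDAR tree heights
print(round(b, 2), round(stand_carbon_kg(a, b, lidar_heights)))
```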

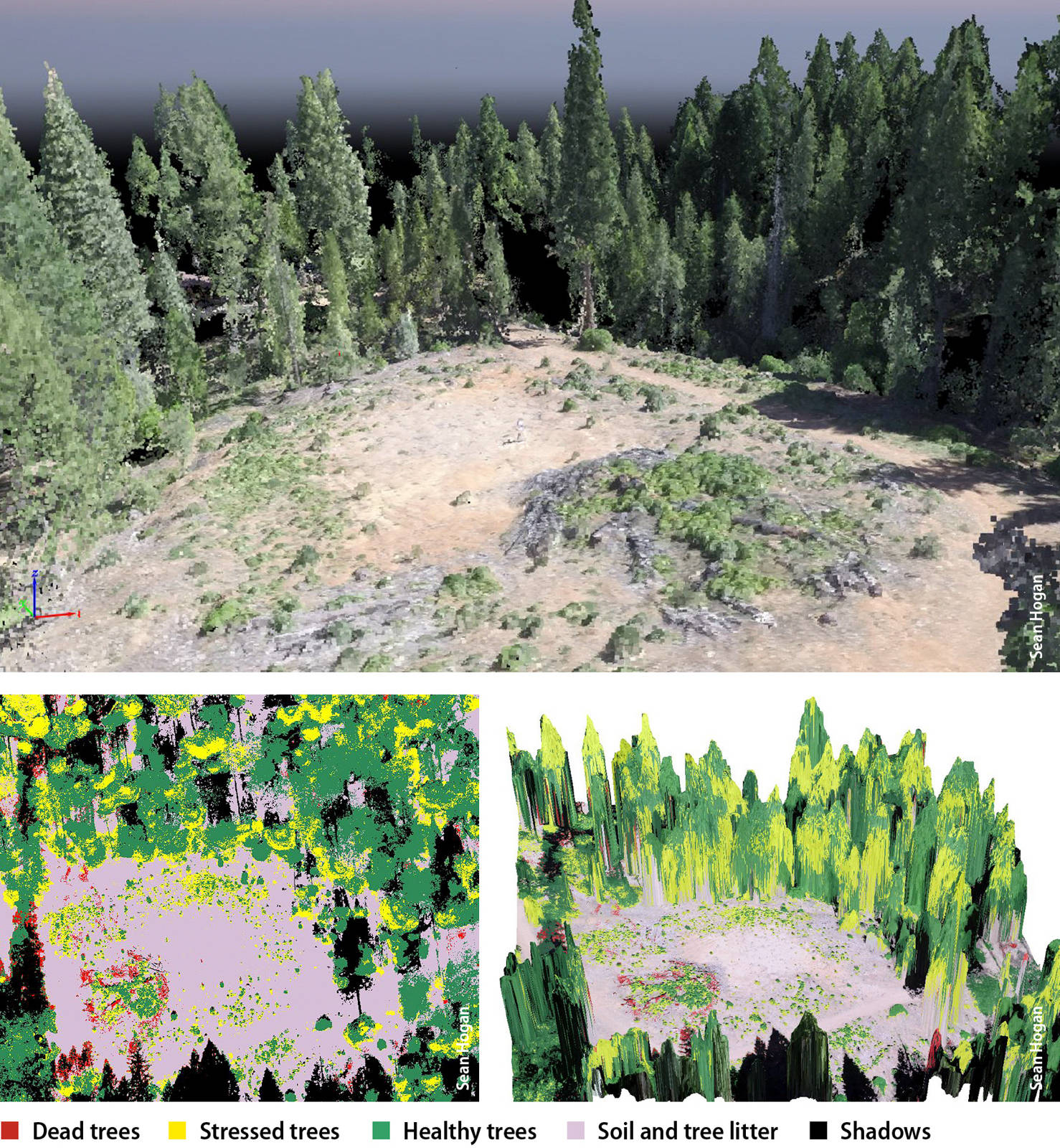

Detecting bark beetle infestations

The forests of California are threatened by drought and disturbance. Bark beetle (subfamily Scolytinae) infestations in the state's coniferous forests are a particularly large concern considering recent drought conditions, the threat of forest fires and climate change. There is a need both for better methods of early detection of beetle infestation and for visualization tools to help make the case for investments in suppression (Six et al. 2014).

Aerial photograph of trees in El Dorado County killed by bark beetles, taken from a DJI Inspire 1 drone with a DJI Zenmuse X5 camera.

High-spatial-resolution multispectral UAS imagery and 3-D data products have proven effective for monitoring spectral and structural dynamics of beetle-infested conifers across a variety of ecosystems (Minařík and Langhammer 2016). Sean Hogan of the UC ANR IGIS program is testing the use of machine learning algorithms applied to UAS imagery to efficiently classify early beetle infestations of ponderosa pines (Pinus ponderosa) in California's Sierra Nevada foothills. Preliminary results indicate that even imagery from a basic GoPro RGB camera can be used to accurately detect bark beetle–induced stress in these trees.

Photographic imagery gathered by a drone can be processed to enable early identification of trees stressed by bark beetles across a large area. The image at top shows a colorized point cloud, a 3-D representation of a stand of trees. The lower two images depict the same stand of trees (from slightly different angles); an analysis based on differences in foliage color is used to classify trees as healthy, stressed or dead. Images were collected with a GoPro 12-megapixel camera.
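The article does not specify which machine learning algorithm is being tested, so as a stand-in here is one of the simplest supervised classifiers, nearest centroid, applied to foliage color. Each crown's mean RGB is converted to chromatic coordinates (each channel's share of total brightness, which reduces the effect of illumination), class centroids are learned from ground-checked crowns, and new crowns take the label of the closest centroid. Crown delineation is assumed to be done already, and all colors are invented for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

RGB = Tuple[float, float, float]


def chromatic_coords(rgb: RGB) -> Tuple[float, float]:
    """Red and green shares of total brightness (blue share is implied)."""
    r, g, b = rgb
    total = r + g + b
    return (r / total, g / total)


def train_centroids(samples: List[Tuple[RGB, str]]) -> Dict[str, Tuple[float, float]]:
    """Mean chromatic coordinates for each label among ground-checked crowns."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for rgb, label in samples:
        rc, gc = chromatic_coords(rgb)
        acc = sums[label]
        acc[0] += rc
        acc[1] += gc
        acc[2] += 1
    return {label: (s[0] / s[2], s[1] / s[2]) for label, s in sums.items()}


def classify(rgb: RGB, centroids: Dict[str, Tuple[float, float]]) -> str:
    """Label of the centroid nearest to this crown's chromatic coordinates."""
    feature = chromatic_coords(rgb)
    return min(centroids, key=lambda label: math.dist(feature, centroids[label]))


# Hypothetical mean crown colors (0-255) with field-verified labels.
training = [((60, 120, 50), "healthy"), ((55, 110, 45), "healthy"),
            ((120, 120, 60), "stressed"), ((130, 125, 65), "stressed"),
            ((140, 80, 60), "dead"), ((150, 85, 65), "dead")]
centroids = train_centroids(training)
print(classify((125, 122, 62), centroids))
```

More capable classifiers (random forests, for instance) follow the same train-then-predict pattern but can use many more features, such as texture or 3-D structure from the point cloud.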

Rangeland ecology

Over 34.1 million of California's 101 million acres (33.7%) are classified as grazed rangelands (CDF 2008). The cattle industry contributes significantly to the state's economy, and the proper management of these rangelands is important for many reasons, including forage production, preservation of natural habitats and the maintenance of downstream water quality.

High-quality, timely information on rangeland conditions can guide management decisions, such as when, where and how intensively to graze livestock. UAS enable high-resolution aerial imagery of rangelands to be collected at much greater speed and lower cost than was previously possible. Translating that imagery into information that is useful to range managers, however, remains a challenge. A UC ANR team — including GIS and remote sensing academic coordinator Sean Hogan, UC Davis–based rangeland and restoration specialists Leslie Roche, Elise Gornish and Kenneth Tate, assistant specialist Danny Eastburn and Yolo County livestock and natural resources advisor Morgan Doran — is working on this problem from several angles at research sites in Napa County's Vaca Mountains, and in Lassen and Modoc counties.

Author Sean Hogan flying a DJI Inspire 1 drone for a rangeland ecology study at Gamble Ranch in Napa County.

- Low-cost multispectral UAS cameras may be able to collect images that would enable the differentiation of common rangeland weeds, such as barbed goatgrass (Aegilops triuncialis) and yellow star thistle (Centaurea solstitialis), from forage grasses. Efficient classification and mapping of such invasive weeds could help inform scientific research on weed treatments; it could also guide range management, for instance by allowing rapid assessment of forage availability, which could in turn guide stocking rates. In this study, data from manual ground surveys of weed and forage cover are being used to “teach” image-analysis software (via what's known as a machine-learning algorithm) how the spectral signatures of goatgrass and yellow star thistle differ from those of forage species at various times of year.

- Cattle manure is a common source of bacterial contamination in California waterways. UAS imagery may enable precise mapping of the location and volume of manure deposits on the landscape. Such data could then inform models that predict likely fecal coliform loading in nearby streams.

- Photographic imagery collected by UAS may enable estimates of forage production by measuring changes in grass height over time. The research team is comparing ground-level measurements of vegetation height with the results of digital surface models (very high-resolution topographic maps) generated from images captured by UAS-mounted cameras. The image-processing software uses a photogrammetric approach, which analyzes multiple overlapping images to generate precise elevation maps (Sean Hogan, UC ANR, igis.ucanr.edu).
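Measuring grass growth from digital surface models (DSMs) comes down to raster arithmetic: vegetation height is the DSM (top of vegetation) minus a bare-ground elevation model (DTM), and growth is the change in that height between two flights. A minimal sketch, assuming all rasters are co-registered on the same grid in the same units and that a DTM is available; the tiny rasters are invented, and treating negative heights as zero is a simplifying choice.

```python
import numpy as np


def vegetation_height(dsm, dtm):
    """Per-cell vegetation height: surface elevation minus bare-ground elevation."""
    height = np.asarray(dsm, dtype=float) - np.asarray(dtm, dtype=float)
    return np.clip(height, 0.0, None)  # small negative values treated as noise


def mean_growth(dsm_early, dsm_late, dtm) -> float:
    """Average change in vegetation height between two surveys of the same plot."""
    return float(np.mean(vegetation_height(dsm_late, dtm) - vegetation_height(dsm_early, dtm)))


# Hypothetical 2 x 2 rasters of elevation in meters.
dtm = [[100.00, 100.00], [100.00, 100.00]]
dsm_march = [[100.05, 100.04], [99.99, 100.06]]
dsm_april = [[100.15, 100.14], [100.10, 100.16]]
print(mean_growth(dsm_march, dsm_april, dtm))
```

Comparing these height estimates with ground-level measurements, as the team is doing, is what shows whether photogrammetric heights are accurate enough to track forage production.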

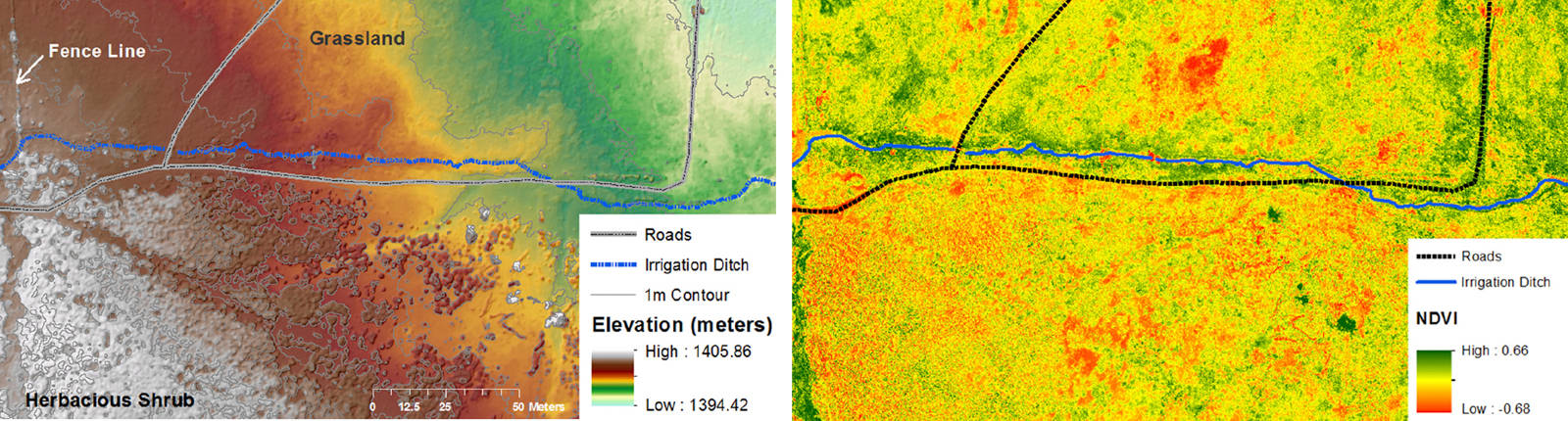

High-resolution imagery gathered from a drone can be used to assess rangeland condition and forage production. The above images show 12 acres of rangeland in Inyo County. The image at left is a digital surface model (DSM) with a resolution of 0.8 inches, generated from digital photographs. Fine-resolution DSMs like this can be used over time to monitor vegetation growth, and hence forage production. The image at right, captured by a Parrot Sequoia multispectral camera, shows the normalized difference vegetation index (NDVI) at a resolution of 1.45 inches. The NDVI compares reflectance in the near-infrared and red bands (healthy green vegetation absorbs red light and strongly reflects near-infrared light) and can be used to distinguish green vegetation (shown in the image as green) from stressed, dying or dead vegetation (shown as yellow to red in the image).
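NDVI is computed per pixel as (NIR - Red) / (NIR + Red), giving values from -1 to 1, with dense green vegetation toward the high end. A minimal sketch with NumPy, assuming the inputs are co-registered red and near-infrared reflectance bands; pixels where both bands are zero are returned as NaN rather than dividing by zero. The sample values are hypothetical.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized difference vegetation index, (NIR - Red) / (NIR + Red), per pixel."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    out = np.full(denom.shape, np.nan)  # NaN where both bands are zero
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


# Hypothetical reflectance values: green grass, bare soil, dry grass, no-data pixel.
nir_band = [0.50, 0.25, 0.30, 0.0]
red_band = [0.08, 0.20, 0.22, 0.0]
print(ndvi(nir_band, red_band))
```

Mapping these values through a green-to-red color ramp produces images like the one at right.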

Notice of revision: The sidebar titled "Regulations" was edited on December 4, 2019 to clarify FAA and UC requirements for UAS flight data logging and reporting.